gradientdescent Namespace Reference

Functions

| Return type | Function |
| --- | --- |
| int | derivative (float x) |
| float | func (int x) |
| float | learn (int x) |

Variables

| Type | Variable |
| --- | --- |
| int | case = 99 |
| dict | grad = {} |

Function Documentation

◆ derivative()

int gradientdescent.derivative (float x)

Definition at line 2 of file gradientdescent.py.

    def derivative(x: float) -> int:  # derivative of f(x) as given by the question
        if x > 0:
            return 1
        elif x < 0:
            return -1
        else:
            return 0  # given
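As a quick check of the three branches, the sketch below repeats the documented body and evaluates it on either side of zero. The function returns the sign of x, which would be the derivative of f(x) = |x| away from zero; that choice of f is an assumption here, since the listing only says the derivative is "given by the question".

```python
def derivative(x: float) -> int:
    """Copy of the documented function: returns the sign of x."""
    if x > 0:
        return 1
    elif x < 0:
        return -1
    else:
        return 0  # value at x == 0 is fixed by the problem statement


# Evaluate on each side of zero and at zero itself.
signs = [derivative(v) for v in (2.5, -0.1, 0.0)]
print(signs)  # [1, -1, 0]
```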

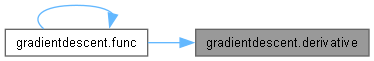

Referenced by func().

Here is the caller graph for this function:

◆ func()

float gradientdescent.func (int x)

Definition at line 15 of file gradientdescent.py.

    def func(x: int) -> float:
        if x == 0:  # initial state x^(0)
            return 2.5
        elif x in grad:  # cache of x^(t)
            return grad[x]
        else:  # not in cache
            return func(x - 1) - learn(x - 1) * derivative(func(x - 1))
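The recursion in func() corresponds to the update x^(t) = x^(t-1) - learn(t-1) * derivative(x^(t-1)), starting from x^(0) = 2.5. The documented body calls func(x - 1) twice and does not itself write to grad, so an uncached call recomputes earlier steps repeatedly. The following is a hedged iterative sketch of the same recurrence, not the author's code; `iterate` is a hypothetical name, and `derivative` and `learn` are re-stated compactly so the block runs on its own.

```python
from typing import List


def derivative(x: float) -> int:
    # Sign of x: 1, -1, or 0, the same values as the documented function.
    return (x > 0) - (x < 0)


def learn(t: int) -> float:
    # Learning rate at step t, as in the documented learn().
    return 1 / (t + 1)


def iterate(steps: int, x0: float = 2.5) -> List[float]:
    """Hypothetical helper: return [x^(0), ..., x^(steps)] without recursion."""
    xs = [x0]
    for t in range(steps):
        x = xs[-1]
        xs.append(x - learn(t) * derivative(x))
    return xs


print(iterate(2))  # [2.5, 1.5, 1.0]
```

Each step is computed once, so the cost grows linearly with the number of steps.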

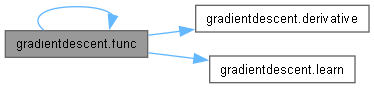

References derivative(), func(), and learn().

Referenced by func().

Here is the call graph for this function:

Here is the caller graph for this function:

◆ learn()

float gradientdescent.learn (int x)

Definition at line 11 of file gradientdescent.py.

    def learn(x: int) -> float:  # learning rate n
        return 1 / (x + 1)
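To make the schedule concrete, this short sketch repeats the documented body and lists the learning rate for the first few step indices. Only the variable name `rates` is introduced here.

```python
def learn(x: int) -> float:
    """Copy of the documented function: learning rate at step x."""
    return 1 / (x + 1)


# Learning rates for steps 0 through 3.
rates = [learn(t) for t in range(4)]
print(rates)  # [1.0, 0.5, 0.3333333333333333, 0.25]
```

The rate shrinks with every step, yet the partial sums of 1 / (x + 1) grow without bound, so the total distance the iterate can travel is not capped by the schedule.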

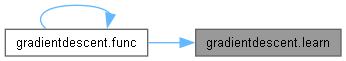

Referenced by func().

Here is the caller graph for this function:

Variable Documentation

◆ case

int gradientdescent.case = 99

Definition at line 25 of file gradientdescent.py.

◆ grad

dict gradientdescent.grad = {}

Definition at line 24 of file gradientdescent.py.
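The documented listings show func() reading from grad, but not how grad is filled or how case is used. One plausible arrangement, offered purely as an assumption about the undocumented remainder of gradientdescent.py, is to fill grad in increasing order of t up to case, so that every func(t) call finds func(t - 1) already cached. The driver loop below is hypothetical; the three functions are copied from the listings.

```python
grad = {}  # cache mapping step index t to x^(t)
case = 99  # assumed here to be the step index of interest


def derivative(x: float) -> int:
    if x > 0:
        return 1
    elif x < 0:
        return -1
    else:
        return 0


def learn(x: int) -> float:
    return 1 / (x + 1)


def func(x: int) -> float:
    if x == 0:  # initial state x^(0)
        return 2.5
    elif x in grad:  # cache of x^(t)
        return grad[x]
    else:  # not in cache
        return func(x - 1) - learn(x - 1) * derivative(func(x - 1))


# Hypothetical driver (not shown in the documented source): fill the cache
# bottom-up so each call recurses at most one level.
for t in range(1, case + 1):
    grad[t] = func(t)

print(grad[case])  # x^(99), a value close to zero
```

Filling the cache from small t upward keeps the recursion depth at one and avoids recomputing earlier steps.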